How To Set Up Custom A/B Testing in AWS

Some companies want you to believe A/B testing is so complicated that you should never consider setting it up yourself. Just pay a fixed monthly fee and leave it to the experts.

They couldn't be more wrong.

Setting up basic A/B testing in AWS is possible for anyone who tinkers with cloud services and can write simple scripts. This solution doesn't have a polished web UI or deliver results by email, but it does answer one important question.

How many users took the action I want on web page A vs. web page B?

To ask this kind of question of your own web app, follow this article to learn how to set up an A/B testing solution in AWS that's fully within your control.

The problem with 3rd party A/B testing solutions

Platforms like Unbounce and Leadpages help customers create high-converting landing pages through a drag-and-drop interface and simple A/B testing tools.

But I've never been satisfied with services like these because:

- Pages are designed with a drag-and-drop UI: I prefer to generate pages from a code template, and track every change with Git.

- Page functionality is limited: if you instead host the page you want to A/B test in AWS, anything is possible.

- Doesn't work for existing pages: if your website is already running, recreating a page in a 3rd party tool feels like a waste of time.

Google Optimize was an awesome free client-side A/B testing framework. Since Google shut it down, I haven't found a 3rd party tool to fill the gap.

If you too don't want to pay for an A/B testing tool that doesn't work the way you want, the next best thing is to create your own.

The two ways to A/B test your web page

Before getting our hands dirty, let's understand what kind of A/B test we want to run.

There are 2 types:

- Making small tweaks to an existing web page e.g. changing a Buy now button from blue to green.

- Rendering an entirely different web page on the same URL e.g. text and layout changes.

In this article we'll do option 2.

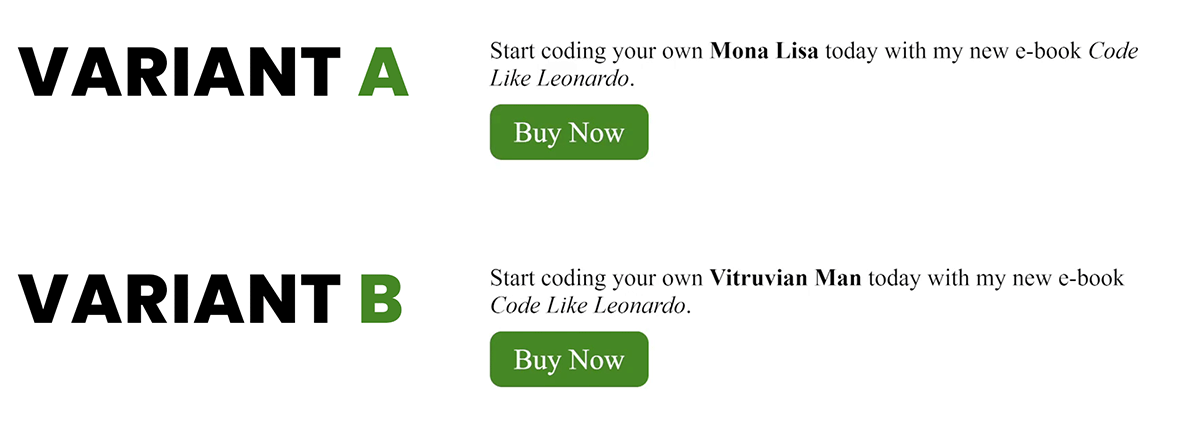

The goal is to A/B test two different landing pages for my new book at https://tomgregory.com/code-like-leonardo/.

I want to know which of two web page variants (a.k.a. versions) is better at convincing users to click the Buy now button.

Importantly, from the user perspective:

- The URL must remain the same whether they see option A or B.

- Refreshing the web page should always return the same version.

- There should be no other indicator that they're taking part in a mad experiment.

The users will have a consistent experience, but in the background we'll measure how many click Buy now for each web page variant until we have enough data to choose A or B.

The tiny list of AWS services you need to do A/B testing

This article assumes you already have a website running in AWS. It doesn't have to be anything fancy, but ideally you're using these 3 services:

- AWS CloudFront: a content delivery network (CDN) that sits between the user and your beautiful website content. It caches content to make it faster for users.

- AWS Lambda: a serverless tool for running code functions on a pay-per-use basis. The secret weapon of any developer who cares about cost.

- AWS S3: the ideal storage for static web pages you want to A/B test. This demo uses S3, but you can use any origin supported by CloudFront (e.g. for dynamic web pages).

If you're already using these services, then congratulations! They're the only ones you need to do A/B testing in AWS.

If you're not, you're not too late to the party. Later I share my own project you can deploy to AWS to help you get started.

Hacking requests before they reach their destination with Lambda@Edge

CloudFront has a secret feature I absolutely love because it lets you do sneaky things to HTTP requests.

Nothing dodgy. Just a little tweak here or a small nudge there.

You see, my static web site lives in an S3 bucket. Between it and my users is AWS CloudFront. It does caching, CORS, and a lot of clever things you can ignore for this article.

Just know that every request to my site goes through CloudFront, and normally it's passed through without modification.

For example, when a user requests https://tomgregory.com/code-like-leonardo/ the following happens.

- The request hits my CloudFront distribution.

- CloudFront knows to forward the request to my S3 bucket called `tomgregory-site`, because that's how I configured it.

- CloudFront fetches an object from my S3 bucket `s3://tomgregory-site/code-like-leonardo/index.html` and returns it to the user.

It's beautiful in its simplicity, but something has to change for our A/B test to return a different page for the same request to https://tomgregory.com/code-like-leonardo/.

That's where a CloudFront feature with a funky name comes in.

Using CloudFront Lambda@Edge

Lambda@Edge is a way to modify an HTTP request before CloudFront decides where to route it. You write a simple Lambda function that takes the request as input, tweaks it in some way, and returns it to CloudFront.

Any idea how that might be useful for A/B testing?

Let's say I create a 2nd version of my landing page in my S3 bucket s3://tomgregory-site/code-like-leonardo-v2/index.html.

Using Lambda@Edge we can modify the original HTTP request's path from /code-like-leonardo/ to /code-like-leonardo-v2/.

That way CloudFront will return the variant instead of the original.

Even better. Nobody will know it's happened except you and me (that's the sneaky part I like).

Although that covers the basics of what we'll set up today, Lambda@Edge can actually run at four points in the request flow (viewer request, origin request, origin response, and viewer response). We only need two of them.

- viewer request is the Lambda@Edge function we just talked about. It tweaks the request as soon as CloudFront receives it, before anything is fetched from S3.

- viewer response is a Lambda@Edge function that modifies the response just before CloudFront sends the web page back to the user.

We'll use viewer response later to make sure individual users always get the same web page variant.

But now let's set up a viewer request Lambda@Edge function to tweak a user's HTTP request and return a randomly chosen web page variant.

The tiny code snippet to randomly select an A/B test variant

Writing a Lambda function to choose an A/B test variant is as simple as generating a random number in JavaScript.

```javascript
let abChoice;
if (Math.random() < 0.5) {
  abChoice = "A";
} else {
  abChoice = "B";
}
```

Based on that random selection, we can then modify the request URI accordingly.

```javascript
request.uri = abChoice === "A" ? '/code-like-leonardo/' : '/code-like-leonardo-v2/';
```

Then wrap that in a function compatible with Lambda@Edge and include the necessary boilerplate.

```javascript
export const handler = async (event) => {
  const request = event.Records[0].cf.request;

  // Only touch the page under test; pass every other request through untouched.
  if (request.uri !== '/code-like-leonardo/') {
    return request;
  }

  // Pick a variant at random with a 50/50 split.
  let abChoice;
  if (Math.random() < 0.5) {
    abChoice = "A";
  } else {
    abChoice = "B";
  }

  // Rewrite the path so CloudFront fetches the chosen variant.
  request.uri = abChoice === "A" ? '/code-like-leonardo/' : '/code-like-leonardo-v2/';

  // An async handler returns the request rather than calling a callback.
  return request;
};
```

Since this function gets called for every request that this CloudFront distribution handles, it's important to check the URI matches the one we actually want to A/B test (the `if` statement at the top of the function). That means requests to my other landing page https://tomgregory.com/gradle-build-bible/ keep working as normal.
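Before deploying, it can be handy to poke at the routing logic on your own machine. The sketch below is a hypothetical harness rather than part of my project: `makeEvent` and `routeRequest` are names made up for this example, the fake event only contains the fields the handler reads, and the random source is passed in as a parameter (an assumption) so the result is repeatable.

```javascript
// Hypothetical local harness: mimics only the parts of a CloudFront
// viewer-request event that the handler reads.
function makeEvent(uri) {
  return { Records: [{ cf: { request: { uri, headers: {} } } }] };
}

// Same routing logic as the handler, with the random source injectable
// (an assumption made here so the outcome can be checked deterministically).
function routeRequest(event, random = Math.random) {
  const request = event.Records[0].cf.request;
  if (request.uri !== '/code-like-leonardo/') {
    return request; // not the page under test: leave untouched
  }
  const abChoice = random() < 0.5 ? 'A' : 'B';
  request.uri = abChoice === 'A' ? '/code-like-leonardo/' : '/code-like-leonardo-v2/';
  return request;
}

// A "random" value of 0.9 always lands on variant B.
console.log(routeRequest(makeEvent('/code-like-leonardo/'), () => 0.9).uri);
// prints /code-like-leonardo-v2/

// Other pages pass straight through.
console.log(routeRequest(makeEvent('/gradle-build-bible/'), () => 0.9).uri);
// prints /gradle-build-bible/
```

Swapping `() => 0.9` for `() => 0.1` should give you variant A instead.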

So how to get this bad boy deployed?

Deployment to AWS

At this point most articles share screenshots of the tedious AWS Console UI setup process. If you're reading this, you probably prefer a more sensible automated solution.

Serverless Framework lets you define your infrastructure as a YAML template and in the background deploys to AWS using CloudFormation. It saves a tonne of time.

Whatever deployment mechanism you use, it needs to set up 2 things.

- A `nodejs20.x` Lambda function containing the above code.

- An association between the Lambda function and your CloudFront distribution.

In Serverless Framework you do that by adding this snippet to your serverless.yml template.

```yaml
functions:
  abTestRequest:
    handler: ab-test-request.handler
    memorySize: 128
    timeout: 5
    lambdaAtEdge:
      eventType: viewer-request
      distribution: CloudFrontDistribution
```

Awesome! You successfully deployed the viewer-request Lambda@Edge function.

If you're using the above demo project, the serverless command prints an ABTestURL you can access to enjoy a randomly assigned web page variant.

Hit F5 and see it change before your eyes!

This is starting to look like a proper A/B test, although one that'll totally confuse your users whenever they hit refresh.

Let's fix that using a technology that everyone loves to hate, the humble browser cookie.

A 100% non-malicious A/B testing cookie

Cookies have a bad reputation for helping tech giants follow users around the web. So let's use a cookie for its intended purpose.

When a browser requests a URL, the response can contain a special Set-Cookie header.

When the browser sees this, it stores the cookie key/value pair and sends it on subsequent requests to the same URL in a Cookie header.

That's great, because it means we can keep track of a user across requests to the same URL to always return the same A/B test variant. When they hit F5 they'll get the same web page.

So we need to add this functionality to our solution:

- Set a cookie so the browser remembers the fact we've returned variant A or B.

- When CloudFront sees that cookie on future requests, use its value to pick the web page variant instead of choosing it randomly.

For #1 we need to do another cheeky CloudFront tweak, but this time to the response. As I said before, that's done with a viewer-response Lambda@Edge function.

Here's the code for a function that includes a Set-Cookie response header containing our A/B test variant.

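```javascript
export const handler = async (event) => {
  const request = event.Records[0].cf.request;
  const response = event.Records[0].cf.response;

  // This header is only present when the viewer-request function has just picked a variant.
  if (request.headers['create-cookie-value']) {
    const cookieValue = "Experiment-Variant=" + request.headers["create-cookie-value"][0].value + "; path=/code-like-leonardo/";
    // Ask the browser to remember the variant for later requests to this path.
    response.headers['set-cookie'] = [{ value: cookieValue }];
  }

  // An async handler returns the response rather than calling a callback.
  return response;
};
```

Points to note: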

- The `if` statement makes sure the cookie is added only on the first request to this URL. The header lookup is a way for the viewer-request function we already deployed to pass the chosen A/B test variant to this function. It's a bit of a hack and will make more sense when we update the viewer-request function shortly.

- The cookie value is a key/value pair, e.g. `Experiment-Variant=A`, along with a `path` option so the browser only sends it on requests to this path.

- Setting the `set-cookie` header in the HTTP response tells the browser to save the cookie.
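One thing the cookie above doesn't have is an expiry, which makes it a session cookie that browsers typically discard when the session ends. If you'd rather keep users on the same variant for longer, here's a minimal sketch of a helper that builds the cookie string with an optional `Max-Age`. The `buildVariantCookie` name and the `maxAgeSeconds` option are my own inventions for this example, not something from the demo project.

```javascript
// Hypothetical helper: builds the Set-Cookie value for the experiment cookie.
// maxAgeSeconds is an assumption added for this sketch; leave it out to get
// the same session cookie as the handler produces.
function buildVariantCookie(variant, { path = '/code-like-leonardo/', maxAgeSeconds } = {}) {
  const parts = [`Experiment-Variant=${variant}`, `Path=${path}`];
  if (Number.isInteger(maxAgeSeconds)) {
    parts.push(`Max-Age=${maxAgeSeconds}`);
  }
  return parts.join('; ');
}

console.log(buildVariantCookie('A'));
// prints Experiment-Variant=A; Path=/code-like-leonardo/

console.log(buildVariantCookie('B', { maxAgeSeconds: 30 * 24 * 60 * 60 }));
// prints Experiment-Variant=B; Path=/code-like-leonardo/; Max-Age=2592000
```

Thirty days is just an example value. Pick whatever matches how long you expect the experiment to run.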

Deploy this function to AWS Lambda and create an association with your CloudFront distribution.

This is how that looks in Serverless Framework mentioned earlier.

```yaml
functions:
  # other functions
  abTestResponse:
    handler: ab-test-response.handler
    memorySize: 128
    timeout: 5
    lambdaAtEdge:
      eventType: viewer-response
      distribution: CloudFrontDistribution
```

Hold on! This won't do anything useful until we modify the viewer-request Lambda@Edge function to inspect the cookie and use it for its A/B testing magic.

Reading a user's cookie value

We've come a long way. I'm really happy you've made it this far.

Quick summary: our viewer-request function returns a randomly chosen A or B variant to the user while our viewer-response function is ready to set a cookie whenever it sees a `create-cookie-value` header.

Now we need to update the viewer-request function to:

1. Read the Experiment-Variant cookie

Add this code to loop through all cookies, identify the Experiment-Variant cookie, and extract its value to pick the A/B test variant.

```javascript
let abChoice;
const headers = request.headers;

if (headers.cookie) {
  for (let i = 0; i < headers.cookie.length; i++) {
    if (headers.cookie[i].value.indexOf('Experiment-Variant=A') >= 0) {
      abChoice = "A";
      break;
    } else if (headers.cookie[i].value.indexOf('Experiment-Variant=B') >= 0) {
      abChoice = "B";
      break;
    }
  }
}
```

2. Set the create-cookie-value header

If the cookie isn't set, choose the A/B test variant randomly like before and set the value inside a create-cookie-value request header. This then gets passed to the viewer-response function once the web page object is fetched from S3.

```javascript
if (!abChoice) {
  if (Math.random() < 0.5) {
    abChoice = "A";
  } else {
    abChoice = "B";
  }
  headers['create-cookie-value'] = [{ value: abChoice }];
}
```

Here's the full updated viewer-request Lambda function.

```javascript
export const handler = async (event) => {
  const request = event.Records[0].cf.request;

  // Only touch the page under test; pass every other request through untouched.
  if (request.uri !== '/code-like-leonardo/') {
    return request;
  }

  let abChoice;
  const headers = request.headers;

  // Returning visitor: reuse the variant stored in the cookie.
  if (headers.cookie) {
    for (let i = 0; i < headers.cookie.length; i++) {
      if (headers.cookie[i].value.indexOf('Experiment-Variant=A') >= 0) {
        abChoice = "A";
        break;
      } else if (headers.cookie[i].value.indexOf('Experiment-Variant=B') >= 0) {
        abChoice = "B";
        break;
      }
    }
  }

  // First visit: pick a variant at random and tell the viewer-response
  // function to set the cookie.
  if (!abChoice) {
    if (Math.random() < 0.5) {
      abChoice = "A";
    } else {
      abChoice = "B";
    }
    headers['create-cookie-value'] = [{ value: abChoice }];
  }

  request.uri = abChoice === "A" ? '/code-like-leonardo/' : '/code-like-leonardo-v2/';

  // An async handler returns the request rather than calling a callback.
  return request;
};
```

Once you deploy this change, every request to the URL returns the same A/B test variant however hard you bash the F5 key.
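The cookie lookup in the handler uses a substring match, which is fine for a demo but would also match a differently named cookie that happens to end in `Experiment-Variant=A`. If that worries you, here's a sketch of a stricter lookup that compares the cookie name exactly. `readVariant` is a hypothetical helper name, and it assumes the same CloudFront header shape the handler already relies on (an array of objects with a `value` string).

```javascript
// Hypothetical helper: returns 'A', 'B', or undefined from CloudFront request
// headers, matching the cookie name exactly instead of by substring.
function readVariant(headers) {
  for (const header of headers.cookie || []) {
    for (const pair of header.value.split(';')) {
      const [name, ...rest] = pair.trim().split('=');
      const value = rest.join('=');
      if (name === 'Experiment-Variant' && (value === 'A' || value === 'B')) {
        return value;
      }
    }
  }
  return undefined;
}

const exampleHeaders = { cookie: [{ value: 'theme=dark; Experiment-Variant=B' }] };
console.log(readVariant(exampleHeaders)); // prints B

// A similarly named cookie is ignored.
console.log(readVariant({ cookie: [{ value: 'Old-Experiment-Variant=A' }] })); // prints undefined

// No cookies at all.
console.log(readVariant({})); // prints undefined
```

In the handler you could then write `let abChoice = readVariant(request.headers);` and keep the random fallback exactly as it is.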

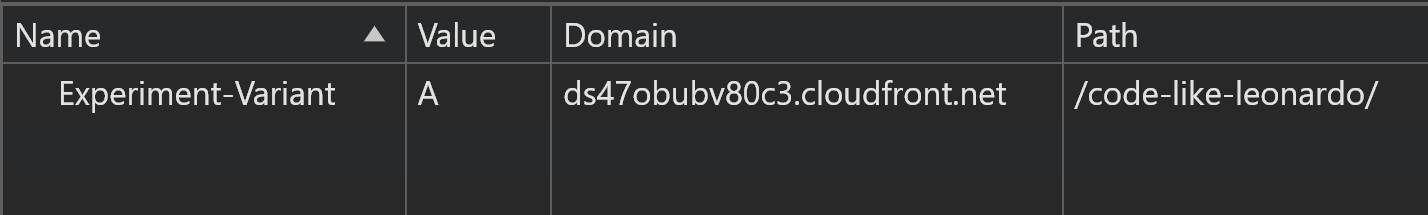

In developer tools (F12) you can inspect the cookie value to see which variant you're getting.

Just for fun, try deleting the cookie to trick the system into giving you the other variant.

We're nearly there.

The user now receives the same random A/B test variant every time. This can scale to 1 user or 1,000,000 users, but it's all pointless without a way to measure which variant actually gets the click.

Measuring a custom A/B test to pick a winner

Every time someone clicks that big green Buy now button, you need to know whether they're doing so from variant A or B.

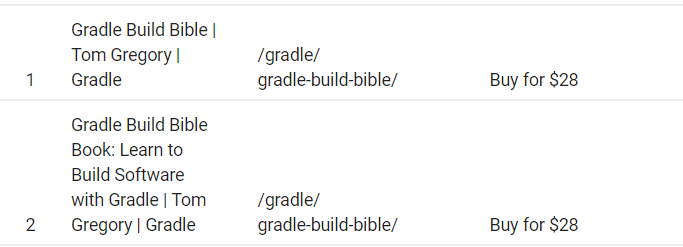

How you do that depends on your setup, but one option is to use Google Tag Manager to send an event into Google Analytics every time a user clicks. That event should include something to differentiate the two pages, like:

- different `<a>` link text

- different `<a>` class

- different page `<title>`

Here are two example events from a previous A/B test I ran with different page titles:

The Google Analytics events will include the total click count so you can crunch the numbers.
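If you'd rather not rely on page differences, another option is to read the variant straight from the cookie in the browser and include it in the event. This is only a sketch under a few assumptions: the event name `buy_now_click`, the `ab_variant` field, and the `#buy-now` selector are placeholders I made up, and it relies on the cookie being readable from JavaScript (it is here, since we never set `HttpOnly`). `window.dataLayer` is the array Google Tag Manager reads events from.

```javascript
// Extracts the variant from a document.cookie-style string.
function variantFromCookie(cookieString) {
  const match = /(?:^|;\s*)Experiment-Variant=(A|B)(?:;|$)/.exec(cookieString);
  return match ? match[1] : 'unknown';
}

// Builds the event object to push to the Google Tag Manager data layer.
// 'buy_now_click' and 'ab_variant' are placeholder names.
function buildClickEvent(cookieString) {
  return { event: 'buy_now_click', ab_variant: variantFromCookie(cookieString) };
}

// Browser-only wiring; skipped when the script runs outside a browser.
if (typeof document !== 'undefined') {
  window.dataLayer = window.dataLayer || [];
  const button = document.querySelector('#buy-now'); // hypothetical selector
  if (button) {
    button.addEventListener('click', () => {
      window.dataLayer.push(buildClickEvent(document.cookie));
    });
  }
}
```

You'd still need a trigger in Tag Manager that listens for the custom event and forwards it to Google Analytics.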

So which variant was successful?

Choosing a winner

Let's say after 24 hours 100 users visited your page:

- 50 users got variant A and 5 clicked Buy now

- 50 users got variant B and 10 clicked Buy now

Our dumb human brains want to pick the bigger number. Job done.

But there's more to it than that.

The sample size (how many users visited your page) determines the confidence with which you can choose a variant.

Variant B got more clicks, but it doesn't necessarily mean it's the winner. The sample size is too low to declare a winner.

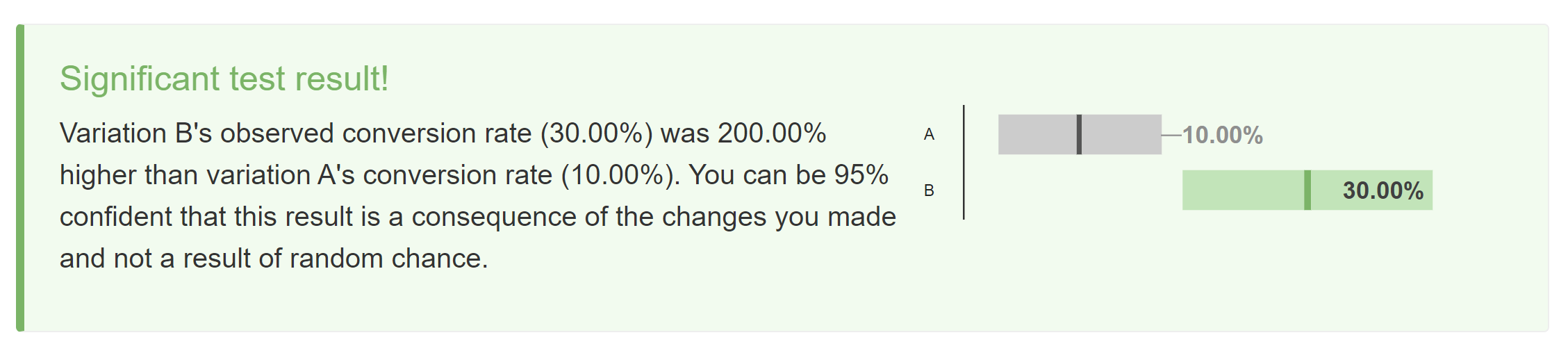

Rather than guess your way around this, plug the numbers into a calculator like the one at abtestguide.com. It does the math for you and clearly shows whether your results are significant or not.

If it's not a significant result, give the test time to get more data or choose a different experiment entirely.
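If you're curious what a calculator like that does under the hood, here's a rough sketch using a two-proportion z-test, which is one common way to compare conversion rates. I'm not claiming it's the exact method abtestguide.com uses. The function names are my own, and the normal distribution is approximated with the Abramowitz and Stegun formula for the error function.

```javascript
// Abramowitz and Stegun approximation of the error function (formula 7.1.26).
function erf(x) {
  const sign = x < 0 ? -1 : 1;
  const ax = Math.abs(x);
  const t = 1 / (1 + 0.3275911 * ax);
  const poly = ((((1.061405429 * t - 1.453152027) * t + 1.421413741) * t
    - 0.284496736) * t + 0.254829592) * t;
  return sign * (1 - poly * Math.exp(-ax * ax));
}

// Standard normal cumulative distribution function.
function normalCdf(z) {
  return 0.5 * (1 + erf(z / Math.SQRT2));
}

// Two-proportion z-test with a pooled standard error.
function twoProportionZTest(clicksA, usersA, clicksB, usersB) {
  const rateA = clicksA / usersA;
  const rateB = clicksB / usersB;
  const pooled = (clicksA + clicksB) / (usersA + usersB);
  const standardError = Math.sqrt(pooled * (1 - pooled) * (1 / usersA + 1 / usersB));
  const z = (rateB - rateA) / standardError;
  const pValue = 2 * (1 - normalCdf(Math.abs(z))); // two-sided
  return { rateA, rateB, z, pValue };
}

// The example from above: 5 of 50 clicked on A, 10 of 50 clicked on B.
const result = twoProportionZTest(5, 50, 10, 50);
console.log(result.z.toFixed(2), result.pValue.toFixed(2));
// prints 1.40 0.16
```

A p-value of about 0.16 is well above the usual 0.05 threshold, which backs up the point that 100 visitors isn't enough to call variant B the winner.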

Final thoughts

Don't forget to check out the GitHub project to see the A/B testing solution from this article working for yourself.

Feel free to extend the code examples for your own project or build your own fancy pants A/B testing tool.

Happy testing!
