Using Jenkins and Kaniko to build Docker images in AWS

Building a Docker image from a Jenkins pipeline that itself runs in a container (for example, on AWS Fargate) is the Docker-in-Docker problem. It's often solved by giving the container privileged access to the host, which isn't possible in Fargate. The alternative is Kaniko, a tool that builds Docker images inside a container without needing privileged access.

In this article you'll learn how to use Kaniko from Jenkins to easily build and push a Docker image, so you can keep your CI pipelines totally serverless.

How Kaniko can future-proof your Jenkins pipelines

Kaniko runs in a Docker container and has the single purpose of building and pushing a Docker image. This design means it's easy for us to spin one up from within a Jenkins pipeline, running as many as we need in AWS.

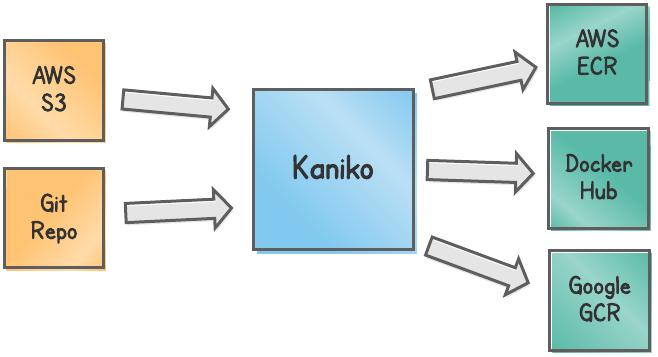

Kaniko works by taking an input, known as the build context, which contains the Dockerfile and any other files needed to build the image. It can get the build context from an AWS S3 bucket, a Git repository, local storage, and more.

Kaniko then uses the build context to build the Docker image, and pushes the image to any supported registry, such as AWS ECR, Docker Hub, or Google's GCR.

Benefits of using Kaniko with Jenkins

Thinking specifically about Jenkins pipelines running in AWS, Kaniko is a big help because:

- we can run Kaniko as a normal serverless AWS Fargate container, using Elastic Container Service (ECS) or Elastic Kubernetes Service (EKS). No privileged access to the host is required.
- we can run as many Kaniko containers as we need, scaling our Jenkins pipelines horizontally
- we don't need to worry about cleaning up images, running out of disk space, or anything else related to managing a server

Getting your Jenkins environment ready to embrace Kaniko

In the rest of this article you'll learn how to integrate Kaniko into your own Jenkins pipeline, using AWS ECS. There are many ways to set this up, but we'll assume the build context will be stored in S3 and the image will be pushed to AWS ECR.

You'll need a few things set up in your environment before you can run Kaniko containers in ECS. Below are high-level details of what you need. Later on, you can deploy an AWS Cloud Development Kit (CDK) application to your own AWS account to create exactly these resources and see Jenkins and Kaniko in action.

Build context S3 bucket

The Kaniko build context is stored in S3, so you'll need a bucket that Jenkins has permission to put objects into. See below for the full list of Jenkins permissions.

Destination ECR repository

The destination ECR repository is where Kaniko will push your final Docker image. Your Jenkins pipeline can later deploy the image directly from ECR.

ECS task role for Kaniko

If you're storing the build context in S3, Kaniko needs permission to read it:

- s3:GetObject

Likewise, it needs permission to push images to the destination ECR repository:

- ecr:GetAuthorizationToken
- ecr:InitiateLayerUpload
- ecr:UploadLayerPart
- ecr:CompleteLayerUpload
- ecr:PutImage
- ecr:BatchGetImage
- ecr:BatchCheckLayerAvailability

Create an IAM role for Kaniko with all these permissions.
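You might create this role by hand or from a script instead of with the demo CDK application. In that case, a policy like the following grants exactly the permissions listed above. It's a minimal Python sketch. The helper name and the repository ARN are hypothetical placeholders, and kaniko-build-context is the bucket name used by the demo. One detail worth knowing: ecr:GetAuthorizationToken doesn't support resource-level permissions, so it has to be granted on "*". The push actions, by contrast, can be scoped to the single destination repository.

```python
import json


def kaniko_task_role_policy(bucket_name: str, repository_arn: str) -> dict:
    """Hypothetical helper: IAM policy document for the Kaniko ECS task role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Read the build context archive from S3.
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
            {
                # GetAuthorizationToken can't be scoped to a repository.
                "Effect": "Allow",
                "Action": "ecr:GetAuthorizationToken",
                "Resource": "*",
            },
            {
                # Layer and manifest uploads, limited to the destination repository.
                "Effect": "Allow",
                "Action": [
                    "ecr:InitiateLayerUpload",
                    "ecr:UploadLayerPart",
                    "ecr:CompleteLayerUpload",
                    "ecr:PutImage",
                    "ecr:BatchGetImage",
                    "ecr:BatchCheckLayerAvailability",
                ],
                "Resource": repository_arn,
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder ARN in the same <region>/<account-id> style as the rest of the article.
    policy = kaniko_task_role_policy(
        "kaniko-build-context",
        "arn:aws:ecr:<region>:<account-id>:repository/kaniko-demo",
    )
    print(json.dumps(policy, indent=2))
```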

ECS task execution role for Kaniko

The ECS task execution role is used by AWS when managing the Kaniko container. It needs the following permissions to send logs to CloudWatch:

- logs:CreateLogStream
- logs:PutLogEvents

In this example we'll use tkgregory/kaniko-for-ecr:latest, a public Kaniko image I've provided on Docker Hub. If you store this image in ECR instead, you'll also need to give the execution role permission to pull it (ecr:GetAuthorizationToken, ecr:BatchCheckLayerAvailability, ecr:GetDownloadUrlForLayer and ecr:BatchGetImage).
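Both Kaniko roles also need a trust policy that lets ECS tasks assume them. Below is a minimal Python sketch of that trust policy and of the execution role's logging permissions. It assumes Kaniko logs to a dedicated CloudWatch log group; the log group name kaniko and the helper names are hypothetical.

```python
import json


def ecs_tasks_trust_policy() -> dict:
    """Trust policy letting ECS tasks assume a role (needed by both Kaniko roles)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "ecs-tasks.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def kaniko_execution_role_policy(log_group_arn: str) -> dict:
    """Permissions for the awslogs driver to write into one log group.

    log_group_arn is expected without a trailing ":*", e.g.
    arn:aws:logs:<region>:<account-id>:log-group:kaniko (hypothetical group).
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["logs:CreateLogStream", "logs:PutLogEvents"],
                # Log streams are addressed below the group, hence ":*".
                "Resource": f"{log_group_arn}:*",
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(ecs_tasks_trust_policy(), indent=2))
    print(json.dumps(
        kaniko_execution_role_policy("arn:aws:logs:<region>:<account-id>:log-group:kaniko"),
        indent=2,
    ))
```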

ECS task definition for Kaniko

The task definition configures how Kaniko will run in ECS, including details of the Docker image to use, resource requirements, and IAM roles.

- the launch type should be FARGATE, which means AWS provisions the underlying resources on which the container runs
- the task role and task execution role should be set to the roles described above
- for memory and CPU you can pick any combination Fargate supports. I've used 1024 MB of memory and 512 CPU units with no problems.
- the task definition should specify a single container for Kaniko
- the image needs to be a Kaniko Docker image plus a config.json file telling Kaniko we're pushing to ECR. To do this we extend the gcr.io/kaniko-project/executor base image and add the config file. You can use the image I've made available at tkgregory/kaniko-for-ecr:latest.
- for logging you can use the awslogs driver to send logs to CloudWatch
- no ports need to be exposed, since we're not making any requests to Kaniko
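These settings map onto the parameters of ECS's RegisterTaskDefinition API. As a sketch, here's a Python helper that builds the keyword arguments you could pass to boto3's ecs.register_task_definition(**kwargs). The helper name, log group and stream prefix are assumptions. The family name kaniko-builder is the one that appears in the demo's IAM policy.

```python
import json


def kaniko_task_definition(family: str, image: str, task_role_arn: str,
                           execution_role_arn: str, log_group: str, region: str,
                           cpu: str = "512", memory: str = "1024") -> dict:
    """Hypothetical helper: kwargs for ecs.register_task_definition()."""
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",  # the network mode Fargate tasks require
        "cpu": cpu,  # task-level CPU units, passed as a string
        "memory": memory,  # task-level memory in MiB, passed as a string
        "taskRoleArn": task_role_arn,  # S3 + ECR permissions
        "executionRoleArn": execution_role_arn,  # CloudWatch Logs permissions
        "containerDefinitions": [
            {
                # Must match the container name used in containerOverrides.
                "name": "kaniko",
                "image": image,
                "essential": True,
                "logConfiguration": {
                    "logDriver": "awslogs",
                    "options": {
                        "awslogs-group": log_group,
                        "awslogs-region": region,
                        "awslogs-stream-prefix": "kaniko",
                    },
                },
                # No portMappings: nothing ever connects to Kaniko.
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(kaniko_task_definition(
        family="kaniko-builder",
        image="tkgregory/kaniko-for-ecr:latest",
        task_role_arn="<kaniko-task-role-arn>",
        execution_role_arn="<kaniko-execution-role-arn>",
        log_group="kaniko",
        region="<region>",
    ), indent=2))
```

Naming the container kaniko matters: the run task JSON later in this article overrides the command of the container with that name.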

Jenkins command line tools

You'll need jq and gettext-base (which includes the envsubst command we'll use later on) installed on your Jenkins master/agent to follow the rest of this tutorial.

This can be done in the Jenkins Dockerfile with this RUN instruction.

```dockerfile
RUN apt-get update && apt-get install -y jq gettext-base
```

You'll also need the AWS CLI so that the Jenkins pipeline can interact with AWS. Here's the Dockerfile instruction.

```dockerfile
RUN curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip" && unzip awscliv2.zip && ./aws/install
```

If you try out the demo CDK application, it uses a Jenkins Docker image I've provided with these prerequisites already installed.
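A missing tool only shows up as a failing sh step partway through a build, so you might check for them up front. Here's a small Python sketch that reports which of jq, envsubst and aws aren't on the agent's PATH. The helper name is hypothetical.

```python
import shutil

# Commands the pipeline in this article shells out to.
REQUIRED_TOOLS = ("jq", "envsubst", "aws")


def missing_tools(tools=REQUIRED_TOOLS) -> list:
    """Return the tools that can't be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    print("Missing: " + ", ".join(missing) if missing else "All required tools found")
```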

Jenkins permissions

Jenkins needs these IAM permissions to interact with the above resources.

- put objects in the build context S3 bucket (s3:PutObject)
- run ECS tasks using the Kaniko task definition (ecs:RunTask)
- pass role to allow Jenkins to run an ECS task with the two Kaniko roles (iam:PassRole)
- describe tasks to check when Kaniko has stopped running, explained below (ecs:DescribeTasks)
- list task definitions to get the latest Kaniko task definition revision, explained below (ecs:ListTaskDefinitions)
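If you write this policy yourself, watch one detail. Pinning ecs:RunTask to a single task definition revision (the demo policy shown next allows revision 11) means RunTask is denied once the pipeline picks up a newer revision, unless the policy is updated as well. A wildcard revision avoids that. Here's a Python sketch that builds the policy this way. The function name and parameters are hypothetical.

```python
import json


def jenkins_policy(bucket: str, region: str, account_id: str,
                   task_family: str, kaniko_role_arns: list) -> dict:
    """Hypothetical helper: IAM policy for the Jenkins role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            # Upload the build context.
            {"Effect": "Allow", "Action": "s3:PutObject",
             "Resource": f"arn:aws:s3:::{bucket}/*"},
            # ":*" matches every revision of the task definition family.
            {"Effect": "Allow", "Action": "ecs:RunTask",
             "Resource": f"arn:aws:ecs:{region}:{account_id}:task-definition/{task_family}:*"},
            # Poll the task and look up the latest revision.
            {"Effect": "Allow", "Action": ["ecs:DescribeTasks", "ecs:ListTaskDefinitions"],
             "Resource": "*"},
            # Hand the Kaniko task role and execution role to ECS.
            {"Effect": "Allow", "Action": "iam:PassRole",
             "Resource": list(kaniko_role_arns)},
        ],
    }


if __name__ == "__main__":
    print(json.dumps(jenkins_policy(
        "kaniko-build-context", "<region>", "<account-id>", "kaniko-builder",
        ["<kaniko-task-role-arn>", "<kaniko-execution-role-arn>"],
    ), indent=2))
```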

If you deploy the demo CDK application, the policy attached to your Jenkins role will look like this.

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Action": "s3:PutObject",
            "Resource": "arn:aws:s3:::kaniko-build-context/*",
            "Effect": "Allow"
        },
        {
            "Action": "ecs:RunTask",
            "Resource": "arn:aws:ecs:<region>:<account-id>:task-definition/kaniko-builder:11",
            "Effect": "Allow"
        },
        {
            "Action": [
                "ecs:DescribeTasks",
                "ecs:ListTaskDefinitions"
            ],
            "Resource": "*",
            "Effect": "Allow"
        },
        {
            "Action": "iam:PassRole",
            "Resource": [
                "arn:aws:iam::<account-id>:role/JenkinsKanikoStack-KanikoECSRole7D7DCDAA-2CJ4Y8PV1JDW",
                "arn:aws:iam::<account-id>:role/JenkinsKanikoStack-kanikotaskdefinitionExecutionRo-5LQJA8TGT3HM"
            ],
            "Effect": "Allow"
        }
    ]
}
```

Scripting your pipeline to build and push images to AWS ECR

With the above prerequisites in place, we need to modify our Jenkins pipeline to include these steps to build the Docker image with Kaniko.

1. Upload the build context to S3

Once the application has been built, the Dockerfile and required files should be archived to a .tar.gz file and uploaded to the build context S3 bucket.
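The pipeline does this with a one-line sh step. If you'd rather script it in Python, a minimal sketch might look like the following. It assumes a boto3 S3 client is passed in as s3_client, and the helper names are hypothetical. It keeps the build/docker prefix inside the archive, just as tar c build/docker does, because the run task JSON passes ./build/docker as Kaniko's --context-sub-path.

```python
import io
import tarfile
from pathlib import Path


def build_context_archive(directory: str, root: str = ".") -> bytes:
    """Gzip-compressed tarball of root/directory, stored under `directory`."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w:gz") as tar:
        # add() is recursive by default; arcname keeps paths like build/docker/Dockerfile.
        tar.add(str(Path(root) / directory), arcname=directory)
    return buffer.getvalue()


def upload_build_context(s3_client, bucket: str, archive: bytes,
                         key: str = "context.tar.gz") -> None:
    """Upload the archive; s3_client is assumed to be boto3.client("s3")."""
    s3_client.put_object(Bucket=bucket, Key=key, Body=archive)
```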

```groovy
sh "tar c build/docker | gzip | aws s3 cp - 's3://$KANIKO_BUILD_CONTEXT_BUCKET_NAME/context.tar.gz'"
```

2. Create the run task JSON file

When we call the AWS CLI to run the task, it's easiest to pass a file containing the container command that tells Kaniko what to build. This file can live in your application repository, which is how it's set up in the example application used by the CDK demo.

You can see in the file below that:

- we use environment variables to reference infrastructure elements such as subnet IDs and security group IDs
- the environment variables will be substituted during the Jenkins pipeline build image stage using the envsubst command
- application-specific elements such as Dockerfile build arguments can be hard-coded or added dynamically by your application build tool

```json
{
    "cluster": "${KANIKO_CLUSTER_NAME}",
    "launchType": "FARGATE",
    "networkConfiguration": {
        "awsvpcConfiguration": {
            "subnets": [
                "${KANIKO_SUBNET_ID}"
            ],
            "securityGroups": [
                "${KANIKO_SECURITY_GROUP_ID}"
            ],
            "assignPublicIp": "DISABLED"
        }
    },
    "overrides": {
        "containerOverrides": [
            {
                "name": "kaniko",
                "command": [
                    "--context",
                    "s3://${KANIKO_BUILD_CONTEXT_BUCKET_NAME}/context.tar.gz",
                    "--context-sub-path",
                    "./build/docker",
                    "--build-arg",
                    "JAR_FILE=spring-boot-api-example-0.1.0-SNAPSHOT.jar",
                    "--destination",
                    "${KANIKO_REPOSITORY_URI}:latest",
                    "--force"
                ]
            }
        ]
    }
}
```

We can substitute the environment variables with this command, which generates a new file to be used in step 4.

```groovy
sh 'envsubst < ecs-run-task-template.json > ecs-run-task.json'
```

To learn about all the arguments you can pass to Kaniko, check out the Kaniko docs.
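envsubst replaces undefined variables with empty strings, so a missing KANIKO_SUBNET_ID would only surface later as an ECS error. If you'd prefer the substitution to fail fast, here's a Python sketch using the standard library's string.Template, which understands the same ${VAR} syntax. The function name is hypothetical. You would read ecs-run-task-template.json, render it, and write ecs-run-task.json.

```python
import os
from string import Template


def render_template(template_text: str, env=None) -> str:
    """Replace ${VAR} placeholders, raising KeyError for any missing variable.

    envsubst would silently substitute an empty string instead.
    """
    values = os.environ if env is None else env
    return Template(template_text).substitute(values)


if __name__ == "__main__":
    demo = '{"cluster": "${KANIKO_CLUSTER_NAME}"}'
    print(render_template(demo, {"KANIKO_CLUSTER_NAME": "jenkins-cluster"}))
```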

3. Get the latest Kaniko task definition revision

When we run the Kaniko task, we pass a task definition with a specific revision number.

Here we query the latest ACTIVE revision through the CLI. Strictly speaking, if you pass only the family name to run-task, ECS uses the latest ACTIVE revision automatically, so this lookup is optional.
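With boto3, the same lookup might look like the following sketch. It assumes an ECS client is passed in, and the helper names are hypothetical. task_definition_from_arn mirrors the sed step, turning arn:aws:ecs:<region>:<account-id>:task-definition/kaniko-builder:11 into kaniko-builder:11. The lookup also fails clearly when nothing matches, instead of handing an invalid value to run-task.

```python
def task_definition_from_arn(arn: str) -> str:
    """Keep only what follows the last "/", like sed 's:.*/::'."""
    return arn.rsplit("/", 1)[-1]


def latest_task_definition(ecs_client, family_prefix: str) -> str:
    """Return "family:revision" of the newest ACTIVE task definition.

    ecs_client is assumed to be boto3.client("ecs").
    """
    response = ecs_client.list_task_definitions(
        familyPrefix=family_prefix, status="ACTIVE", sort="DESC", maxResults=1
    )
    arns = response.get("taskDefinitionArns", [])
    if not arns:
        raise LookupError(f"No ACTIVE task definition matches prefix {family_prefix!r}")
    return task_definition_from_arn(arns[0])
```

Note that familyPrefix matches every family whose name starts with the prefix, so a prefix unique to the Kaniko family avoids picking up another family's revision.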

The following Jenkins pipeline snippet sorts the task definitions newest first, picks the first ARN, and uses sed to keep just the family:revision part.

```groovy
script {
    LATEST_TASK_DEFINITION = sh(returnStdout: true, script: "/bin/bash -c 'aws ecs list-task-definitions \
        --status active --sort DESC \
        --family-prefix $KANIKO_TASK_FAMILY_PREFIX \
        --query \'taskDefinitionArns[0]\' \
        --output text \
        | sed \'s:.*/::\''").trim()
}
```

4. Run the Kaniko task

We tell AWS to run Kaniko in ECS, passing the correct commands using the ecs-run-task.json file created in step 2. Using this file means we can keep the command more concise.

```groovy
script {
    TASK_ARN = sh(returnStdout: true, script: "/bin/bash -c 'aws ecs run-task \
        --task-definition $LATEST_TASK_DEFINITION \
        --cli-input-json file://ecs-run-task.json \
        | jq -j \'.tasks[0].taskArn\''").trim()
}
```

This is when the image gets built and the real magic happens. ✨
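If you drive ECS from Python rather than the CLI, the same call can be sketched with boto3. The sketch assumes an ECS client is passed in, and the helper name is hypothetical. The run task JSON can be reused unchanged, because --cli-input-json takes the same keys as the RunTask API. The sketch also checks the response's failures list, since RunTask can report that no task was started without raising an error. The jq pipeline above would then just print null.

```python
import json


def run_kaniko_task(ecs_client, task_definition: str,
                    run_task_json_path: str = "ecs-run-task.json") -> str:
    """Start the Kaniko task and return its ARN.

    ecs_client is assumed to be boto3.client("ecs").
    """
    with open(run_task_json_path) as f:
        params = json.load(f)  # cluster, launchType, networkConfiguration, overrides
    response = ecs_client.run_task(taskDefinition=task_definition, **params)
    # RunTask reports tasks it couldn't start in "failures" rather than raising.
    if response.get("failures") or not response.get("tasks"):
        raise RuntimeError(f"ECS did not start the task: {response.get('failures')}")
    return response["tasks"][0]["taskArn"]
```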

5. Wait for the Kaniko task to finish

When you run an ECS task with the AWS CLI it returns a response immediately. We want the Jenkins pipeline to wait until Kaniko has finished running, so that we can use the built image in future stages of the pipeline.
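In the pipeline this is done with two AWS CLI waiters, tasks-running followed by tasks-stopped. By default the CLI's tasks-stopped waiter polls every 6 seconds for up to 100 attempts, roughly 10 minutes, which a slow image build could exceed. A boto3 version is sketched below with the limits made explicit. It waits only for STOPPED, which also covers tasks that are still PENDING or RUNNING. The helper name and parameters are hypothetical, and the ECS client is assumed to be passed in.

```python
def wait_for_task_to_stop(ecs_client, cluster: str, task_arn: str,
                          delay_seconds: int = 6, max_attempts: int = 100) -> None:
    """Block until the task reaches STOPPED.

    ecs_client is assumed to be boto3.client("ecs"). Raise max_attempts
    for builds that take longer than delay_seconds * max_attempts.
    """
    waiter = ecs_client.get_waiter("tasks_stopped")
    waiter.wait(
        cluster=cluster,
        tasks=[task_arn],
        WaiterConfig={"Delay": delay_seconds, "MaxAttempts": max_attempts},
    )
```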

```groovy
sh "aws ecs wait tasks-running --cluster $KANIKO_CLUSTER_NAME --tasks $TASK_ARN"
echo "Task is running"
sh "aws ecs wait tasks-stopped --cluster $KANIKO_CLUSTER_NAME --tasks $TASK_ARN"
echo "Task has stopped"
```

6. Verify the outcome of the Kaniko build

During this validation stage, we make sure that the Kaniko container exited with code 0. Any other value means the build failed, so we should stop the pipeline early.
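The Groovy check below reads containers[0], which is fine because the task definition has a single container. If the container never started, for example because its image couldn't be pulled, there's no exit code and the CLI query prints None, which the check already treats as a failure. Here's a Python/boto3 variation that selects the container by name and includes ECS's stoppedReason in the error, which makes such failures easier to diagnose. The helper names are hypothetical, kaniko is the container name from the run task JSON, and the ECS client is assumed to be passed in.

```python
def kaniko_exit_code(describe_tasks_response: dict, container_name: str = "kaniko"):
    """Return the named container's exit code, or None if it never ran."""
    task = describe_tasks_response["tasks"][0]
    for container in task.get("containers", []):
        if container.get("name") == container_name:
            # exitCode is absent when the container never started.
            return container.get("exitCode")
    return None


def check_kaniko_build(ecs_client, cluster: str, task_arn: str) -> None:
    """Raise if the Kaniko container didn't exit with code 0."""
    response = ecs_client.describe_tasks(cluster=cluster, tasks=[task_arn])
    exit_code = kaniko_exit_code(response)
    if exit_code != 0:
        reason = response["tasks"][0].get("stoppedReason", "unknown")
        raise RuntimeError(
            f"Kaniko exited with code {exit_code!r} (stoppedReason: {reason}). "
            "Check the logs for details."
        )
```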

```groovy
script {
    EXIT_CODE = sh(returnStdout: true, script: "/bin/bash -c 'aws ecs describe-tasks \
        --cluster $KANIKO_CLUSTER_NAME \
        --tasks $TASK_ARN \
        --query \'tasks[0].containers[0].exitCode\' \
        --output text'").trim()

    if (EXIT_CODE == '0') {
        echo 'Successfully built and published Docker image'
    } else {
        error("Container exited with unexpected exit code $EXIT_CODE. Check the logs for details.")
    }
}
```

Full sample Jenkins pipeline

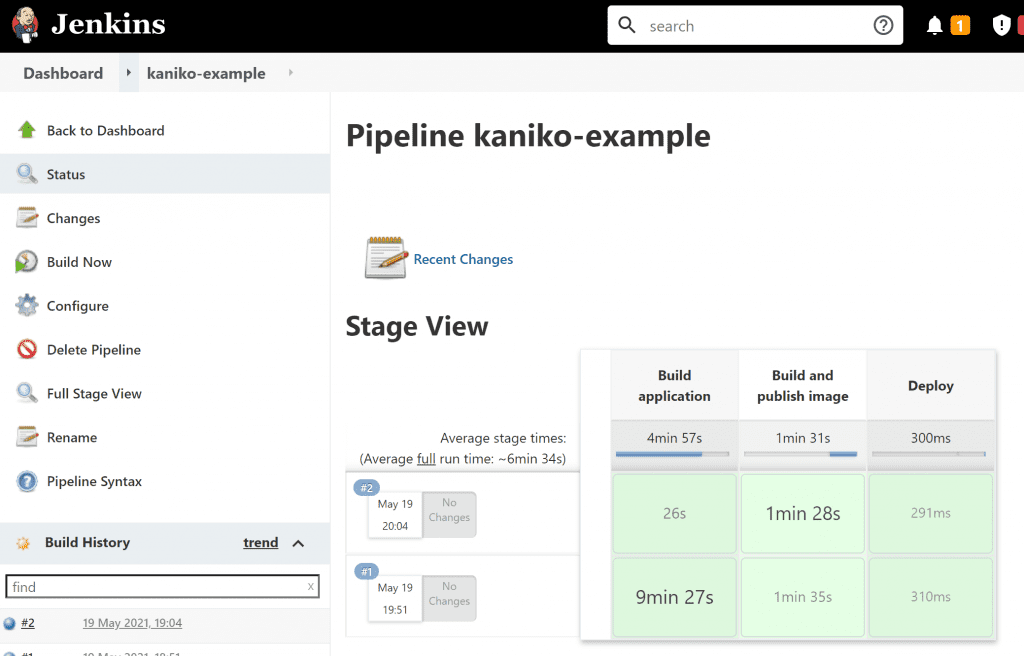

Using all the techniques described above ends up with a pipeline like the one below. It builds a Spring Boot application using Gradle, then builds the Docker image using Kaniko, waiting until the image has been built and pushed to ECR.

```groovy
pipeline {
    agent any
    stages {
        stage('Build application') {
            steps {
                git url: 'https://github.com/jenkins-hero/spring-boot-api-example.git', branch: 'kaniko'
                sh "./gradlew assemble dockerPrepare -Porg.gradle.jvmargs=-Xmx2g"
                sh "tar c build/docker | gzip | aws s3 cp - 's3://$KANIKO_BUILD_CONTEXT_BUCKET_NAME/context.tar.gz'"
            }
        }
        stage('Build and publish image') {
            steps {
                sh 'envsubst < ecs-run-task-template.json > ecs-run-task.json'
                script {
                    LATEST_TASK_DEFINITION = sh(returnStdout: true, script: "/bin/bash -c 'aws ecs list-task-definitions \
                        --status active --sort DESC \
                        --family-prefix $KANIKO_TASK_FAMILY_PREFIX \
                        --query \'taskDefinitionArns[0]\' \
                        --output text \
                        | sed \'s:.*/::\''").trim()
                    TASK_ARN = sh(returnStdout: true, script: "/bin/bash -c 'aws ecs run-task \
                        --task-definition $LATEST_TASK_DEFINITION \
                        --cli-input-json file://ecs-run-task.json \
                        | jq -j \'.tasks[0].taskArn\''").trim()
                }
                echo "Submitted task $TASK_ARN"
                sh "aws ecs wait tasks-running --cluster $KANIKO_CLUSTER_NAME --tasks $TASK_ARN"
                echo "Task is running"
                sh "aws ecs wait tasks-stopped --cluster $KANIKO_CLUSTER_NAME --tasks $TASK_ARN"
                echo "Task has stopped"
                script {
                    EXIT_CODE = sh(returnStdout: true, script: "/bin/bash -c 'aws ecs describe-tasks \
                        --cluster $KANIKO_CLUSTER_NAME \
                        --tasks $TASK_ARN \
                        --query \'tasks[0].containers[0].exitCode\' \
                        --output text'").trim()
                    if (EXIT_CODE == '0') {
                        echo 'Successfully built and published Docker image'
                    } else {
                        error("Container exited with unexpected exit code $EXIT_CODE. Check the logs for details.")
                    }
                }
            }
        }
        stage('Deploy') {
            steps {
                echo 'Deployment in progress'
            }
        }
    }
}
```

Demo setup deployed in your AWS account

The quickest way to try out the above pipeline is to deploy the Jenkins Kaniko CDK application into your AWS account. It will spin up a Jenkins instance, along with:

- all the prerequisite resources discussed earlier
- environment variables automatically made available to be used in the above pipeline
- a preconfigured job definition, so you just need to hit the build button to see Kaniko in action

For instructions on deploying this into your AWS account, see the README.md.

Verifying the image upload

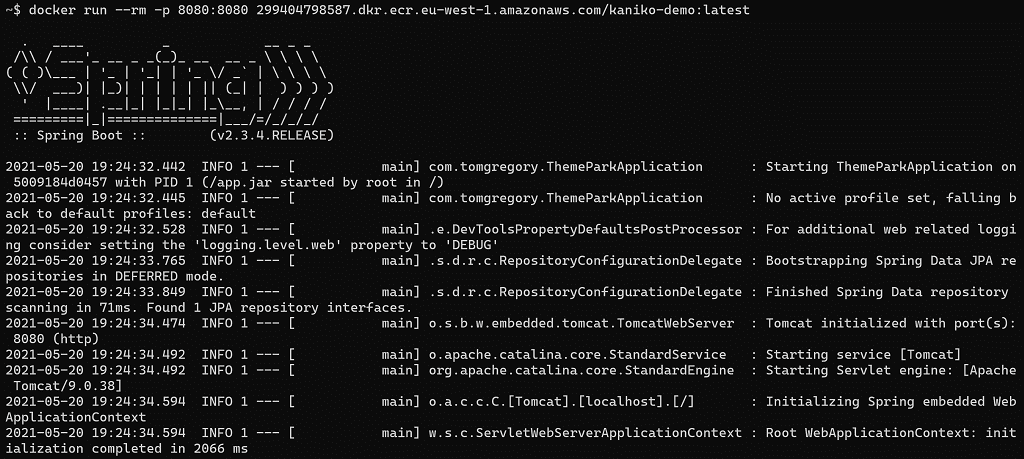

To truly test that Kaniko has properly built and pushed the application Docker image, we can run it with this Docker command, referencing the image in ECR.

```shell
docker run --rm -p 8080:8080 <your-aws-account-id>.dkr.ecr.<your-region>.amazonaws.com/kaniko-demo:latest
```

Remember to first authenticate Docker with your ECR registry by running the command shown under "Authenticate to your default registry" in the Amazon ECR documentation.

This should start a friendly old Spring Boot application, accessible on port 8080. If it looks like this, you're all good. 👍
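You might want to confirm the push without pulling and running the image, for example from a later pipeline stage. One way is to check that the tag exists in the repository. Here's a minimal Python sketch that assumes a boto3 ECR client (boto3.client("ecr")) is passed in. The helper name is hypothetical, and kaniko-demo is the repository used by the demo.

```python
def image_tag_exists(ecr_client, repository: str, tag: str = "latest") -> bool:
    """Return True if `tag` exists in the ECR repository, following pagination."""
    kwargs = {"repositoryName": repository, "filter": {"tagStatus": "TAGGED"}}
    while True:
        page = ecr_client.list_images(**kwargs)
        if any(image.get("imageTag") == tag for image in page.get("imageIds", [])):
            return True
        next_token = page.get("nextToken")
        if not next_token:
            return False
        kwargs["nextToken"] = next_token
```

For example, image_tag_exists(boto3.client("ecr"), "kaniko-demo") should return True once the build above has succeeded.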

Choosing the best option: some Kaniko alternatives

If at this point you don't think Kaniko is right for your situation, why not consider these alternatives?

ECS EC2 cluster

The "traditional" approach is to spin up an ECS cluster consisting of EC2 instances that you manage yourself.

You can then run Jenkins as an ECS task with the required privileged mode and /var/run/docker.sock mount.

This lets you run Docker commands within the container, which actually uses the Docker daemon on the host.

There's certainly nothing wrong with this approach, but in my experience it adds complexity since you need to think about auto-scaling your ECS cluster, maximising resource usage, and managing servers.

AWS CodeBuild

CodeBuild provides out-of-the-box support for building Docker images in a very simple way. No additional containers required!

Of course if you're already running your CI processes in Jenkins, you'll need a way to integrate with CodeBuild. Learn exactly how to do that in the article Integrating AWS CodeBuild into Jenkins pipelines.

Final thoughts

You've seen that it's entirely possible to build a Docker image in a container from a Jenkins pipeline using Kaniko. While the solution right now isn't exactly a one-liner, this approach does have advantages over the alternatives. Specifically, we don't need servers and we don't need to use an entirely different build tool such as AWS CodeBuild.

It's worth noting that if you're building Java applications, there are other ways to solve this problem, such as Jib, Google's tool for building Docker images of Java applications without a Docker daemon.

If this is a problem you're dealing with in your own team, you can see how I approach software delivery in practice.