Every developer wants fast, maintainable tests to help them deploy new code with confidence.

It sounds simple, but why is this goal so difficult to achieve for today’s development teams?

Consider teams that:

- Still test manually before every deployment, because they don’t trust the automated tests.

- Struggle to make important code changes because of the headache of updating tests.

- Leave automated testing until the project’s end, then wonder why the tests are so difficult to write.

Even in this modern age of development, with all the tools and infrastructure available to us, these problems still exist.

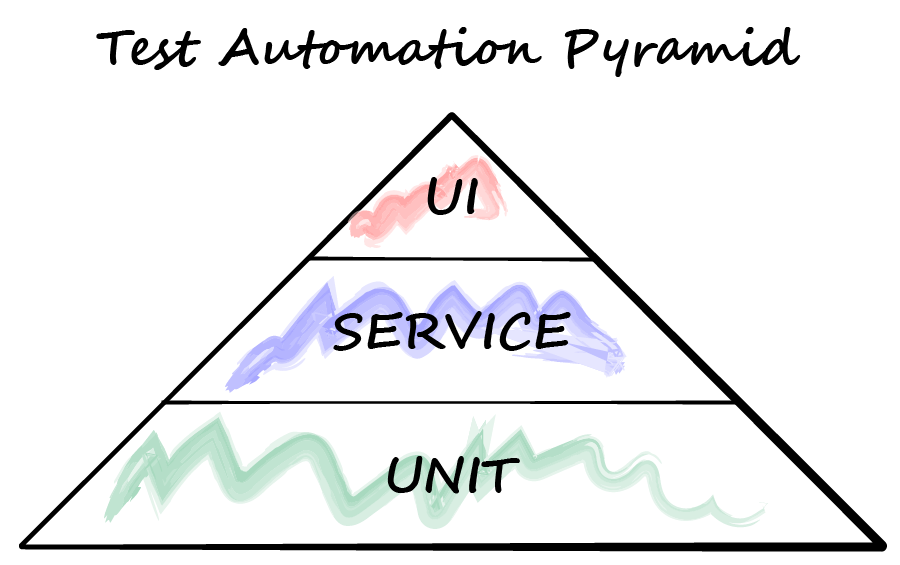

Agile coaches sold us something called the Test Automation Pyramid, which we thought would guide us towards deployment utopia. With just 3 layers, the pyramid suggested such a simple and logical approach that we never thought to question it.

We couldn’t have been more wrong.

The testing approaches of 2009, when the test pyramid was originally proposed, are obsolete today. Or at least, testing today is far more nuanced than we were led to believe.

Over a decade later, many of us continue to follow the same approach like it’s an unbreakable law of physics. It’s time to move on.

Thankfully, pragmatic engineers have suggested alternative approaches better suited to today’s technology.

In this article, you’ll learn how to combine what’s good about the original test pyramid with knowledge of your own project to make every deployment bulletproof. With a custom testing strategy, you’ll minimise effort while maximising the confidence your tests give you.

With more robust software, you’ll create an ecosystem engineers enjoy, delight your customers, and blaze a trail for others to follow.

The Original Test Automation Pyramid

In 2001, the Manifesto for Agile Software Development was published, and its ideas soon caught on, helping to put an end to year-long development cycles and big-bang releases.

Principle #1 was the revolutionary idea of continuous delivery: pushing new software versions to production as soon as code is written.

The business benefits were obvious. Teams could design, build, and deploy new features in weeks rather than years.

“Our highest priority is to satisfy the customer through the early and continuous delivery of valuable software.”

Principle 1 of the Manifesto for Agile Software Development

The only question was how to achieve this on a technical level.

Surely such a feat would need even larger manual testing teams working around the clock to validate every new build before it reaches production?

Fortunately, there was an alternative.

More automated testing. A lot more.

After years of real-life experimentation, software developer Mike Cohn published the Test Automation Pyramid in his 2009 book Succeeding with Agile and in subsequent blog posts.

The model proposes testing at 3 different levels: unit, service, and UI.

- Unit tests at the base of the pyramid are easy to write and give fast feedback to developers. These comprise the majority of tests.

- Service tests sit on top of unit tests and test multiple units in combination. There are fewer of these because, according to the model, they’re more difficult to write than unit tests.

- UI tests are at the top of the pyramid and test the application from the customer’s perspective. There should be few of these because, according to Mike, they’re “expensive to run, expensive to write, and brittle.”

By testing functionality as low down the pyramid as possible, you minimise expensive UI tests and therefore testing effort.

So the theory goes.

“To test this through the user interface, we would script a series of tests to drive the user interface…Testing in this manner would certainly work but would be brittle, expensive, and time consuming.”

Mike Cohn, The Forgotten Layer of the Test Automation Pyramid

Soon the test pyramid became common wisdom in development teams everywhere.

I’ve seen it used on 8 different projects over the years. Every time, it was implemented slightly differently and with varying degrees of success.

Before looking at its limitations, let’s explore some benefits of one specific interpretation of the test pyramid.

Bottom-heavy test pyramid

Taking Mike Cohn’s proposal at face value, we end up with a bottom-heavy test pyramid where unit tests are prioritised over all other types.

To follow this approach, Alan Richardson recommends 3 core principles in The Evil Tester podcast:

- Prioritise unit tests to detect problems early in the deployment pipeline.

- Write unit tests because they’re less effort and less expensive than higher-level tests.

- Only write service or UI tests when you can’t test the functionality lower down the pyramid.

By doing this, you can easily adopt Extreme Programming (XP) practices like Test-Driven Development (TDD) to drive your design from the unit tests. Following XP, you ensure all functionality is tested, since you never add implementation code without first writing a failing test.

With time, this practice becomes second nature because you never have to stop to ask yourself one tricky question.

“Are unit tests really the most appropriate type of test here?”

Unstated assumptions of the test pyramid

While working for an Agile consultancy, I followed the same bottom-heavy test pyramid strategy from project to project. The approach became dogma, and nobody asked whether its founding principles still held true.

Mike Cohn doesn’t provide much detail on his original Test Automation Pyramid model, so every developer interprets it based on their own experience and inevitably makes assumptions.

So let’s look deeper at the test pyramid and question some of its unstated assumptions.

- No clear definition of a “unit”: TDD enthusiasts often define a unit as a single class, but it could also be a unit of functionality incorporating multiple classes.

- Doesn’t define effort: the test pyramid assumes some tests take more effort than others, without defining how to measure it. And effort is about more than simply how long tests take to write and execute.

- Based on 2009 UI testing technology: today we use advanced UI testing frameworks to parallelise tests and run them quickly in the cloud. The tests are also easier to write and maintain.
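To make the “unit” ambiguity concrete, here is a minimal sketch in plain Java. The classes `PriceCalculator`, `DiscountPolicy` and `LoyaltyDiscount` are hypothetical, and simple boolean checks stand in for a test framework such as JUnit so the example runs without dependencies. The first test treats a single class as the unit by stubbing its collaborator; the second treats the pricing behaviour as the unit and exercises both classes together.

```java
// Hypothetical collaborator: decides how much discount (in pence) applies to a price.
interface DiscountPolicy {
    int discountFor(int pricePence);
}

// Hypothetical real policy: 10% off orders of 10,000 pence or more.
class LoyaltyDiscount implements DiscountPolicy {
    @Override
    public int discountFor(int pricePence) {
        return pricePence >= 10_000 ? pricePence / 10 : 0;
    }
}

// Hypothetical class under test: applies whatever policy it is given.
class PriceCalculator {
    private final DiscountPolicy policy;

    PriceCalculator(DiscountPolicy policy) {
        this.policy = policy;
    }

    int finalPrice(int pricePence) {
        return pricePence - policy.discountFor(pricePence);
    }
}

class UnitDefinitionExamples {
    // "Unit = single class": PriceCalculator is tested alone, with a stubbed policy.
    static boolean classLevelTest() {
        DiscountPolicy stub = price -> 500; // fixed discount isolates PriceCalculator
        return new PriceCalculator(stub).finalPrice(2_000) == 1_500;
    }

    // "Unit = unit of functionality": PriceCalculator and LoyaltyDiscount tested together.
    static boolean functionalityLevelTest() {
        PriceCalculator calculator = new PriceCalculator(new LoyaltyDiscount());
        return calculator.finalPrice(20_000) == 18_000
                && calculator.finalPrice(5_000) == 5_000;
    }

    public static void main(String[] args) {
        System.out.println("class-level test passed: " + classLevelTest());
        System.out.println("functionality-level test passed: " + functionalityLevelTest());
    }
}
```

Both count as unit tests under one definition or the other, yet they react differently to change: rewriting `LoyaltyDiscount`’s rules can break the second test but never the first.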

What’s more, the shape of the test pyramid implies a particular distribution of UI, service, and unit tests.

But what is that distribution? 70% unit, 20% service, 10% UI? Shall I pull out my calculator to work out the areas within the triangle?
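Taking that question literally, and assuming the three layers have equal height (the model says nothing about this), the geometry is straightforward: the part of a triangle above a fraction d of its total height is similar to the whole, so its area is d² of the total. The sketch below, using a hypothetical `PyramidAreas` class, applies that to each band.

```java
// Sketch: share of a triangle's area in each of n equal-height horizontal bands.
// Assumes equal-height layers, which the original pyramid does not specify.
class PyramidAreas {
    // Returns the area share of each band, from the top (index 0) to the base.
    static double[] layerShares(int layers) {
        double[] shares = new double[layers];
        for (int i = 0; i < layers; i++) {
            // The triangle above depth d (fraction of total height) is a similar
            // triangle scaled by d, so its area is d^2 of the whole.
            double upper = (double) i / layers;
            double lower = (double) (i + 1) / layers;
            shares[i] = lower * lower - upper * upper;
        }
        return shares;
    }

    public static void main(String[] args) {
        String[] names = {"UI", "service", "unit"}; // top to bottom
        double[] shares = layerShares(names.length);
        for (int i = 0; i < shares.length; i++) {
            System.out.printf("%-7s %.1f%%%n", names[i], shares[i] * 100);
        }
    }
}
```

Running it prints about 11.1% for UI, 33.3% for service and 55.6% for unit tests, so even the picture itself doesn’t give you 70/20/10.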

So here’s a simple question.

The original Test Automation Pyramid might have been a good model for software projects in 2009. But could there be a better modern-day alternative? One that considers the nuances of individual projects and technology to maximise the chance of success?

Design your own test strategy

In a talk for JavaScript Conferences by GitNation, Roman Sandler proposes a radical replacement for the test pyramid.

Rather than relying on a single prescribed test strategy, teams are empowered to create their own approach based on the realities of their specific project. By continually asking the question “What’s the best way to test this functionality?”, you can minimise effort while maximising the confidence tests give you.

OK. Sounds good. But how do you design your own test strategy?

Here are 2 great ways to get started.

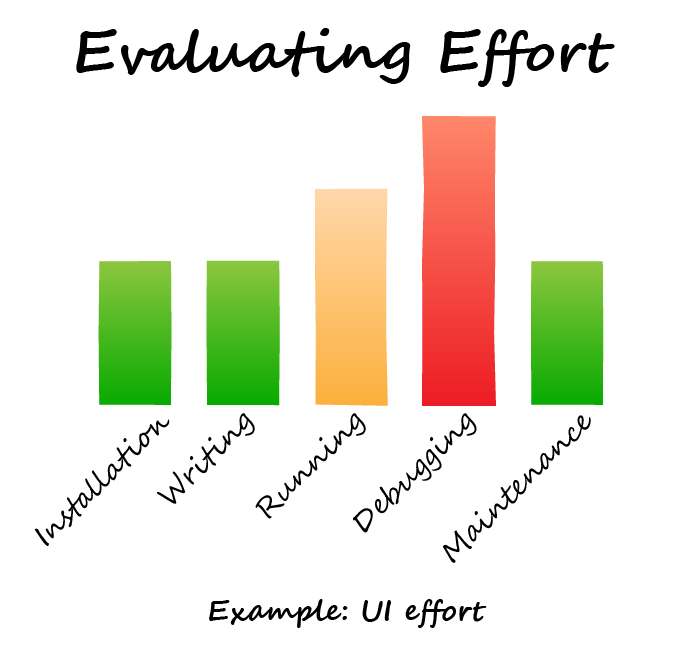

1. Evaluate effort

The long-term effort (or cost) of any test layer depends on factors specific to your team, like the technologies you choose and the skills your team has. So evaluating effort involves more than simply picking the test tool that runs fastest.

Roman suggests measuring effort with 5 time-based metrics:

- installation

- writing

- running

- debugging

- maintenance

Installation and writing effort come down to how well a testing tool is documented and your prior experience with it. Running effort is based on the efficiency of your CI setup. Finally, debugging and maintenance effort depend on the specific testing tool you choose and how well you implement it.

For example, you might decide UI tests are in fact less effort than unit tests.

Perhaps they don’t need to be modified when you change the code implementation, which is one advantage that unit test proponents never seem to mention. If this is the case, you can mark UI tests as low maintenance.
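One way to make this comparison explicit is to record an estimate for each of Roman’s five metrics per test layer and total them. The sketch below assumes you estimate each metric in hours over a period of your choosing. The `LayerEffort` record and `EffortComparison` class are hypothetical, and the figures in `main` are made-up placeholders, not measurements.

```java
import java.util.Comparator;
import java.util.List;

// Hypothetical tally of the five time-based effort metrics for one test layer.
// Each value is an hours estimate over a period your team chooses (e.g. one quarter).
record LayerEffort(String layer, double installation, double writing,
                   double running, double debugging, double maintenance) {

    // Unweighted sum of all five metrics.
    double totalHours() {
        return installation + writing + running + debugging + maintenance;
    }
}

class EffortComparison {
    // Orders layers from least to most total effort.
    static List<LayerEffort> byTotalEffort(List<LayerEffort> layers) {
        return layers.stream()
                .sorted(Comparator.comparingDouble(LayerEffort::totalHours))
                .toList();
    }

    public static void main(String[] args) {
        // Placeholder estimates for illustration only; substitute your team's own numbers.
        List<LayerEffort> layers = List.of(
                new LayerEffort("unit", 2, 40, 1, 10, 30),
                new LayerEffort("UI", 8, 30, 6, 12, 5));
        for (LayerEffort e : byTotalEffort(layers)) {
            System.out.printf("%s: %.0f hours%n", e.layer(), e.totalHours());
        }
    }
}
```

With these placeholder figures, UI tests total 61 hours and unit tests 83, mostly because of the maintenance estimate. A plain sum weights every metric equally; weighting the metrics your team cares about most is a reasonable variation.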

After assessing the effort of different types of tests, you can move on to the next stage.

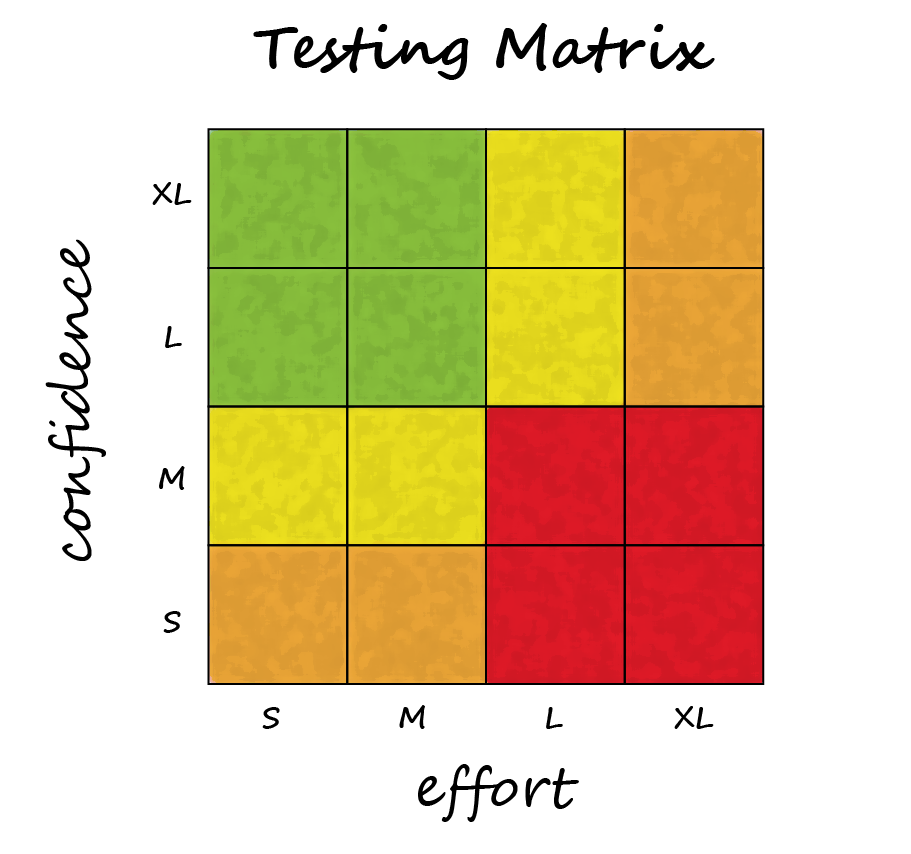

2. Evaluate confidence

Compare the effort of a specific test layer against the confidence those tests give you. Confidence is hard to quantify, but you can come up with a rough T-shirt size.

As an example, a UI test normally gives you more confidence than a unit test covering the same functionality.

If one type of test is high effort but low confidence, then it’s probably not worth your time writing those tests.

If another is medium or low effort and high confidence, that’s where to invest most of your testing time.
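The two rules of thumb above can be written down as a small decision function. This is a minimal sketch: the `Size` enum, the `TestLayerAdvisor` class and the example sizes in `main` are hypothetical, and any combination the rules don’t cover is deliberately left for the team to discuss.

```java
// Hypothetical T-shirt sizes used for both effort and confidence.
enum Size { LOW, MEDIUM, HIGH }

class TestLayerAdvisor {
    // Encodes the two rules of thumb; other combinations need team judgement.
    static String advise(Size effort, Size confidence) {
        if (effort == Size.HIGH && confidence == Size.LOW) {
            return "probably not worth writing";
        }
        if (effort != Size.HIGH && confidence == Size.HIGH) {
            return "invest most testing time here";
        }
        return "discuss as a team";
    }

    public static void main(String[] args) {
        // Example sizes for illustration only.
        System.out.println("UI tests: " + advise(Size.MEDIUM, Size.HIGH));
        System.out.println("Service tests: " + advise(Size.HIGH, Size.LOW));
    }
}
```

For the example inputs, it prints “invest most testing time here” for UI tests and “probably not worth writing” for service tests.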

Following this approach, you can design your own test strategy tailored to your team. It might no longer resemble a pyramid, but that doesn’t matter as long as it helps you deploy regularly with confidence.

If creating your own test strategy sounds like the way forward, you’ll need to decide what test layers you need and how to execute them.

In Java projects, you can do this using Gradle. Each test layer has its own directory and can be run separately on the command line.

To learn how to set this up, grab a copy of [Gradle Build Bible]({{< relref "/gradle-build-bible" >}}), which offers a step-by-step process to master building Java projects with Gradle.
